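A grandmother in Newfoundland heard a panicked voice saying her grandson had been in a car accident and needed bail money. Only after wiring $50,000 did she learn that her grandson had been safe at work the whole time. Whose voice was it? An AI voice clone built from her grandson's social media videos.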

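That same week, other residents of her community lost a combined $200,000 to the same scheme. In 2023, more than 100,000 Americans aged 60 and over reported $3.4 billion in fraud losses, a 14% increase over the previous year. This surge reflects a fundamental shift in cybersecurity threats. Attackers are no longer just stealing credentials; they are stealing humanity itself.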

Why current fraud prevention is failing

Bots draining public resources

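In 2023, California detected more than 50,000 fraudulent, bot-driven student financial aid applications. Thomson Reuters' litigation settlement portal was overwhelmed by automated claim submissions, locking out the actual victims it was built to serve. From disaster relief to government benefits, bot armies are systematically draining resources meant for people.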

Impersonation with synthetic identities

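Anyone who has posted video online is a potential target for voice cloning. A Philadelphia attorney nearly wired bail money after scammers used AI-generated voices posing as his "son," a "public defender," and a "court official." In Hong Kong, employees were deceived by a deepfake video call impersonating their CFO and colleagues, costing their company $25 million. Deepfake fraud attempts rose by 3,000% in 2023. Every call and video now carries the same doubt: is this really the person I know?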

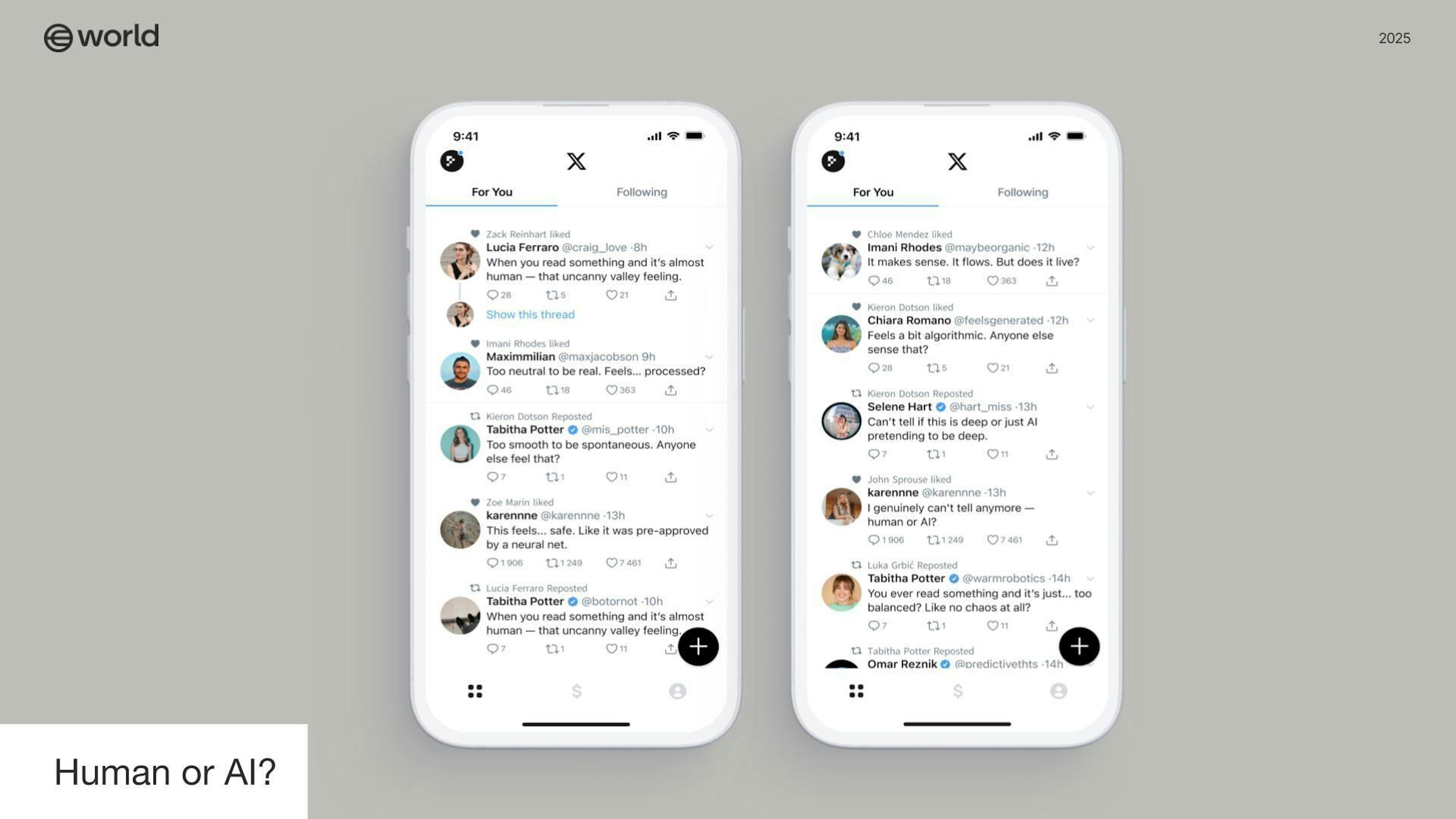

Trust networks eroded by fake profiles

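Dating apps report that 10 to 15% of their users are fake profiles. Professional networks are flooded with AI-generated résumés, and review platforms are fighting bot campaigns that can sink a business overnight. Even public sentiment is now hard to trust. When Cracker Barrel changed its logo in August 2025, 44.5% of the early social media outrage turned out to be bot-generated. Automated accounts exaggerated and distorted genuine criticism until the backlash exploded, and the stock price even fell as a result. If public opinion can be manufactured, how can companies or communities make genuine decisions?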

Manipulating democratic processes

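Bot armies flood public comment systems, skew polls, and manufacture movements that look like grassroots citizen action. They swamp regulatory feedback channels and petition systems with fake consensus. If one person can use a bot farm to mimic thousands of voices, democratic institutions built on the principle of "one person, one vote" cannot hold.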

Identity synthesis and takeover

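Criminals blend real and fabricated information into synthetic identities, then build credit histories with them for years without being detected. When social engineering is combined with synthetic media, even a "forgot password" flow can become a doorway to having your entire life stolen.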

Why current defenses fall short

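Traditional security systems ask questions like "Do you know the right password?" or "Can you receive this SMS?" These checks assume the user is already human. A bot holding a stolen password or a hijacked phone number passes them just as easily as the real account owner. They focus on locking the door without checking whether whoever walks through it is a person or a sophisticated program. What is needed now is a fundamental shift: proof of being a unique human has to be built into the foundation of security. In practice, that means: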

- Privacy-first authentication: prove you are a unique human without revealing personal information. Cryptographic proofs make it possible to demonstrate humanity without surveillance.

- Universal interoperability: a single verification works across services, eliminating repeated checks while preventing services from tracking users across one another.

- Fraud-resistant design: unlike passwords, which can be stolen, proof of human provides verification that cannot be forged or copied.

- Global accessibility: it must work for anyone, anywhere, regardless of device or technical skill.
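The privacy and interoperability principles above can be illustrated with a per-service identifier, often called a nullifier. The following is a hypothetical, simplified sketch and not World ID's actual protocol. It assumes each person holds a random secret on their own device. From that secret, a different identifier is derived for each service using HMAC-SHA256 as a stand-in. The names `new_identity_secret`, `nullifier`, `Service`, and the app IDs are invented for illustration. A real system also requires a zero-knowledge proof that the secret belongs to a verified human. This sketch omits that step, so here nothing stops someone from minting fresh secrets.

```python
import hashlib
import hmac
import secrets


def new_identity_secret() -> bytes:
    """Hypothetical: a random secret created once per verified person, kept on their device."""
    return secrets.token_bytes(32)


def nullifier(identity_secret: bytes, app_id: str, action: str) -> str:
    """Derive a per-service, per-action identifier.

    HMAC-SHA256 is a stand-in: it is deterministic for the same inputs, and
    without the secret, outputs for different app_ids cannot be linked.
    """
    message = f"{app_id}:{action}".encode("utf-8")
    return hmac.new(identity_secret, message, hashlib.sha256).hexdigest()


class Service:
    """Hypothetical service that allows each human to sign up at most once."""

    def __init__(self, app_id: str) -> None:
        self.app_id = app_id
        self.seen: set[str] = set()  # nullifiers already used on this service

    def sign_up(self, proof_nullifier: str) -> bool:
        # A real verifier would first check a zero-knowledge proof that this
        # nullifier came from a verified human; this sketch skips that step.
        if proof_nullifier in self.seen:
            return False  # same human, same service: reject the duplicate
        self.seen.add(proof_nullifier)
        return True


if __name__ == "__main__":
    alice = new_identity_secret()
    forum = Service("app_forum")
    grants = Service("app_grants")

    forum_id = nullifier(alice, forum.app_id, "signup")
    grants_id = nullifier(alice, grants.app_id, "signup")

    print(forum.sign_up(forum_id))    # True: first sign-up accepted
    print(forum.sign_up(forum_id))    # False: duplicate from the same human
    print(grants.sign_up(grants_id))  # True: separate service, separate identifier
    print(forum_id == grants_id)      # False: the services see unrelated IDs
```

The secret never leaves the device, so the forum and the grants service cannot correlate Alice's activity. Each service can still reject a second sign-up from her.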

Building a network of real people to strengthen trust online

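World ID puts these principles into practice through Proof of Human technology. After a single verification as a unique human, users can interact safely across many services, confident that everyone else taking part is a real person.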

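As AI advances rapidly, the window for building robust human verification is closing. Only organizations that adopt Proof of Human now will be able to protect real customers, support real users, and maintain genuine trust. In an era when machines can perfectly imitate humans, proving that we are human becomes the foundation of every meaningful online interaction.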

This October is Cybersecurity Awareness Month. Learn more about building a more human internet at world.org.