Fighting fake accounts with World

Consumer trust and online experiences are getting worse as "AI slop" (low-quality content produced by AI) takes over more and more of what we see.

On social networks, gaming platforms and online marketplaces, it is increasingly hard to tell what or who you are interacting with. An account might belong to a real person… or a bot… or one of many "personas" run by the same actor. Their posts, replies and interactions can look genuine, yet all of us are seeing far more AI-generated content every day.

Why? AI-powered bots have become part of this landscape.

In the past, bots were often seen the same way as spam email: annoying, but something we could filter out and ignore. With AI in the mix, bots have become dramatically better at looking and sounding human. They produce realistic content, take part in conversations and can persuade people to accept their friend requests.

Whether they are steering your attention toward low-quality, ad-stuffed websites, trying to trick you into sharing private information or selling you the latest "must-have" gadget, the data show that more and more people mistake these bots for people they can trust.

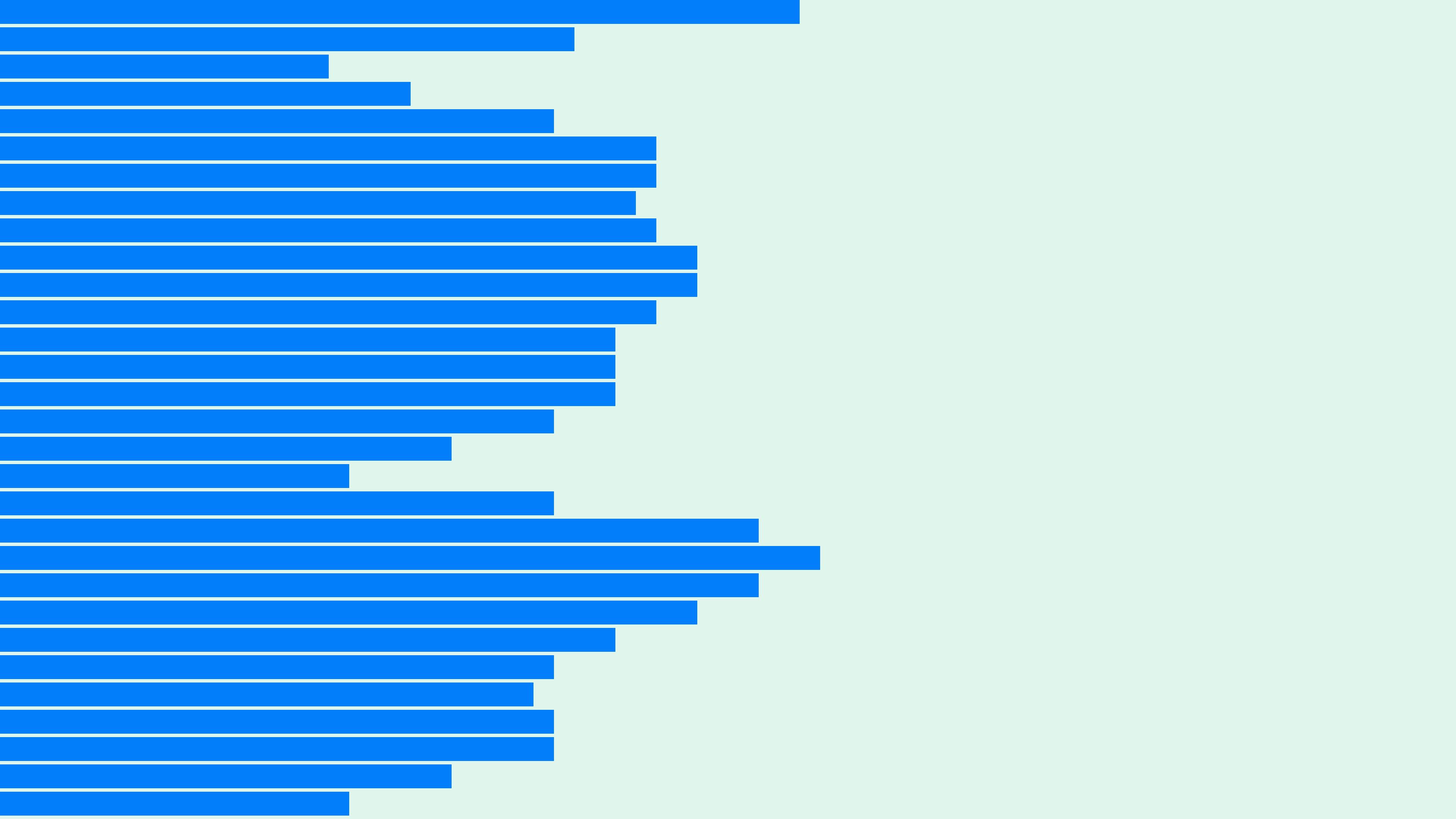

A recent survey of 2,000 U.S. adults, commissioned by World, found that:

- More than three quarters of respondents say the internet has "never been worse" when it comes to telling real content from artificial content

- Respondents believe that half of the news and articles they see online include some AI-generated element

- Only 30% of respondents could tell business reviews written by humans from those written by AI

Why current solutions don't work

When it comes to fighting fake accounts in the age of AI, platforms are fighting a losing battle. Automated tools make it easy to create email addresses in bulk and then use them to spin up a virtually unlimited number of spam accounts. As AI grows more advanced, these bots behave more and more like humans, which makes them hard to detect and shut down.

This is more than a content-moderation problem or a question of platform policy. It is a systemic issue that affects social trust, civic discourse, consumer rights and regulatory compliance. And today's infrastructure is not ready for what comes next.

Without new tools for verifying authenticity online, governments will reach for blunt regulatory instruments, often with unintended consequences: excessive collection of personal data (which can paradoxically make the problem worse by giving AI bots more data to train on), the exclusion of underserved populations, or the fragmentation of the internet along national lines.

A better solution: World ID

Online platforms don't need to check government-issued IDs or add KYC onboarding to keep bots out; they don't even really need to know who you are. And because AI can easily get past CAPTCHAs and fake identities, those approaches won't work anyway. Instead, platforms can build World ID into their sign-in flows, as Razer has done for video games.

World ID takes a fundamentally different approach from the solutions available today. It lets platforms verify that an individual is a real and unique person, without collecting personal data, requiring KYC-style onboarding or putting users through high-friction experiences such as CAPTCHAs.

Implementing World ID on online platforms enables:

- Human-to-human interactions: Consumers can trust that the accounts they interact with are controlled by real humans.

- Greater trust in platforms: Online platforms can once again foster genuine human interaction, which also helps restore trust in their platform economies.

- Reduced personal-data risk: Individuals don't need to share personal data, so platforms don't have to worry about losing or leaking it.

- Resistance to AI: World ID offers a human-centric approach to authentication that AI simply cannot fake.

Today, people are navigating online spaces that feel less safe, less real and less fair. World ID offers a smarter, more human approach, restoring trust online and helping platforms stay open and secure in an AI-driven world.
